When UTM Parameters Go Rogue: Indirect Prompt Injection in AI Assistants

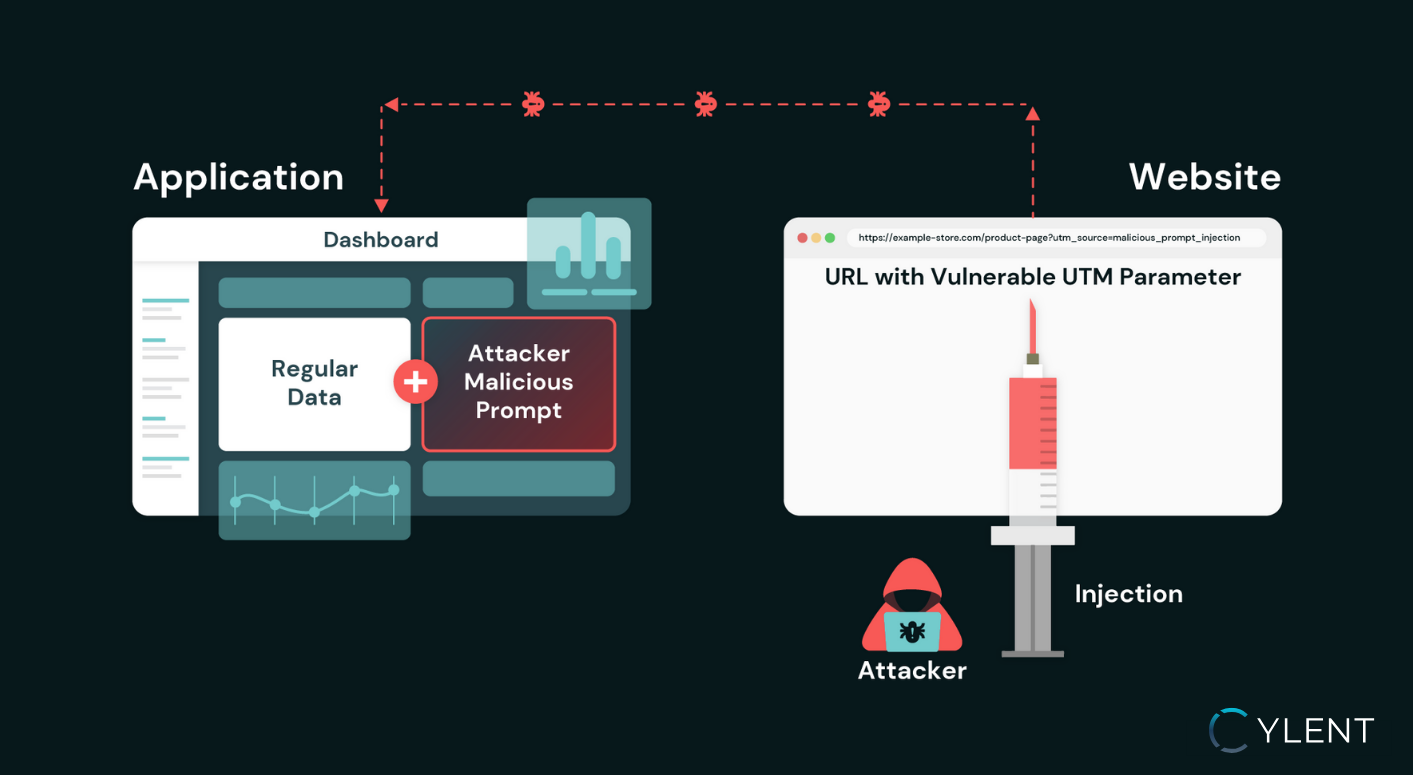

As AI-powered features become embedded into modern applications, their reliance on external data sources introduces new attack surfaces. One of the fastest-growing threats is Indirect Prompt Injection—a technique where attackers manipulate inputs that an AI system later consumes.

During a recent penetration test of a data analytics SaaS platform, Cylent Security identified a critical flaw that allowed attackers to poison an AI Assistant by injecting malicious prompts through nothing more than a UTM parameter. The result? The AI supplied false analytics to business users, undermining decision-making and eroding trust.

This post explains how the attack worked, the root cause of the vulnerability, and the secure design practices that can prevent it.

The Exploit: UTM Parameter as an Indirect Injection Vector

What’s a UTM Parameter?

UTM (Urchin Tracking Module) parameters are small snippets added to the end of a URL (e.g., ?utm_source=google&utm_campaign=summer_sale) that help marketers track where website traffic is coming from.

In e-commerce, they show which ads or campaigns drive sales, making them critical for business analytics. But because UTM parameters are user-controlled inputs, attackers can manipulate them—turning a simple tracking tool into an unexpected security risk.

Exploitation Steps

- UTM Parameter Reflection - UTM parameters, normally used to track marketing traffic in e-commerce, were reflected in the platform’s dashboard.

- AI Assistant Parsing - The AI Assistant automatically parsed this reflected data as input for its analysis.

- Injection of Arbitrary Prompts - By crafting a malicious UTM parameter, we—acting as non-authenticated attackers—inserted custom instructions. The AI processed these as legitimate context, altering its responses.

Outcome

Users saw false analytics reports, leading to misguided marketing spend and the potential for severe business impact.

Root Cause Analysis

- Unfiltered Input Reflection

UTM parameters were reflected without sanitization, enabling injection of arbitrary content. - Lack of Context Isolation in AI Parsing

The AI failed to distinguish between trusted input and untrusted sources, leaving it vulnerable to manipulation.

Mitigation and Best Practices

- Sanitize and Validate User Input - Filter and validate all external data sources—including UTM parameters—before reflecting them in dashboards or passing to AI systems.

- Enforce Context Isolation - AI features should only process data from verified sources with strict input boundaries.

- Whitelist Trusted Sources - Define and enforce a whitelist of approved traffic sources (e.g., only recognized marketing platforms). This prevents arbitrary or malicious UTM parameters from being ingested by your application and later consumed by the AI Assistant.

- LLM as a Judge - Introduce a “proxy LLM” layer that reviews and classifies inputs before they reach the main AI system. This secondary model can act as a filter against prompt injection attempts, flagging suspicious or manipulative instructions embedded in user-controlled data.

- Conduct AI Security Audits - Regular penetration testing for AI applications can uncover prompt injection and other indirect attack paths that traditional testing misses.

Why Secure Design Matters in AI Applications

AI features are only as reliable as the data they consume. Without security by design, businesses risk analytics poisoning, customer mistrust, and financial loss.

At Cylent Security, our team specializes in AI security testing and secure design reviews. We’ve uncovered real-world cases like this Indirect Prompt Injection via UTM parameters, helping SaaS companies protect their users and ensure trustworthy AI insights.

Don’t wait until attackers find the gap. Contact us to securely design your applications from day one.